In the games of national security, intelligence services very rarely have complete, empirical information about a situation. And mistaken understandings from faulty intelligence collection or analysis often result in costly and dangerous consequences. History is filled with examples of such intelligence errors, including assumptions about Iraq’s role in the 9/11 attacks and its WMD capabilities, Japanese naval activity before Pearl Harbor, and many others.

Intelligence services dedicate tremendous effort to reducing these errors by collecting information through multiple means when possible, carefully vetting sources, and using highly structured approaches when evaluating ambiguous and often conflicting information.

As one example of this point, intelligence analysts are trained to anticipate the influence of personal biases and the limits of collected information. And when writing reports, disciplined analysts rarely state inferences (conclusions derived from available evidence and reasoning) in absolute terms. Instead, they attribute a probability value (such as 90%, 0.9, HIGH, etc.) to describe their confidence in the accuracy of a statement.

For example, an intelligence analyst might state: “We are 90% confident that Russian military activity on the Ukrainian border indicates preparation for invasion.” Although the analyst may strongly believe this conclusion based on overwhelming evidence, the analyst admits uncertainty and presents the information accordingly.

Perhaps there’s a valuable lesson here for use in spiritual work.

Cultivating awareness of the difference between knowledge and unverified belief is a most valuable practice in the work of self-knowing.

Belief is defined as “something one accepts as true or real; a firmly held opinion or conviction.”

Knowledge is defined as “facts and information acquired through experience.”

In the previous example, the intelligence analyst’s inference about Russians on the Ukrainian border is, in principle, a statement of belief. Not knowledge. The analyst does not ‘see into the mind of Russian leaders’ or possess a crystal ball of omniscience. And the disciplined analyst reports this belief to the customer (decision-makers reading the report) by consciously acknowledging the possibility of error.

To put this idea into practice, here’s an experiment to try…

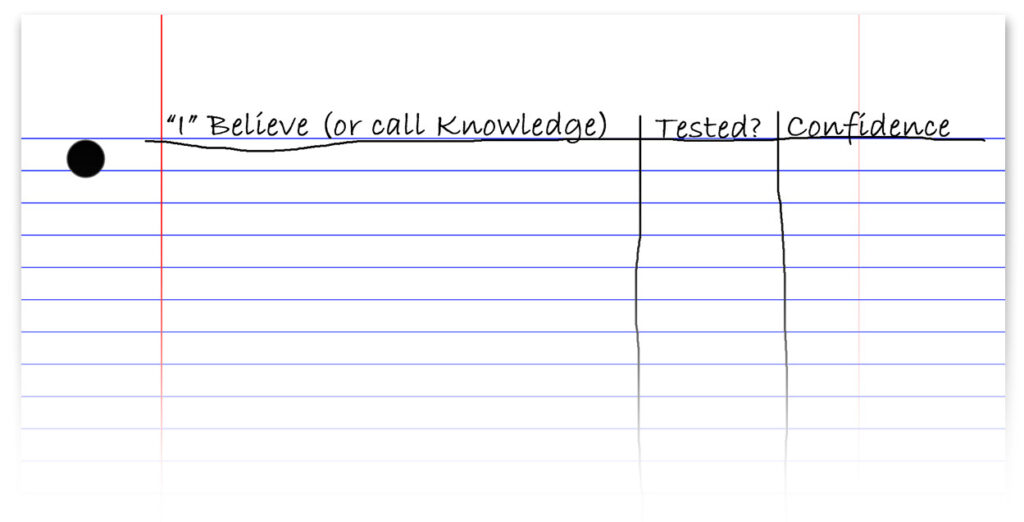

Dedicate a sheet of paper to re-evaluating beliefs and information one personally regards as knowledge. Create three columns:

- “I” believe (or call knowledge)

- Has this belief been tested?

- What is “my” degree of confidence?

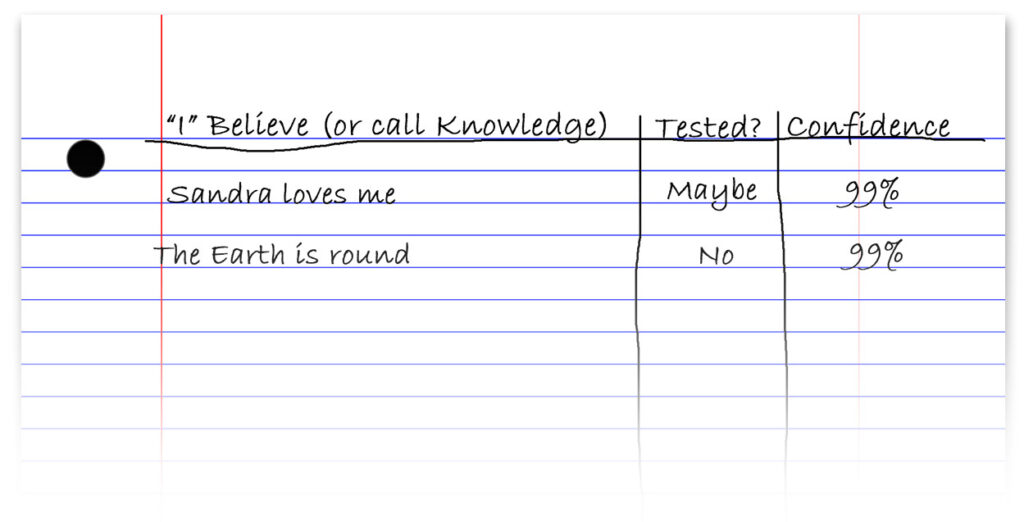

As an example, Craig believes Sandra loves him. He’s tested this hypothesis over the years (and maybe in more ways than one). So a confidence value of 99% is attributed to this belief. Although there’s been plenty of evidence observed during their marriage and he often feels love radiating when she looks at him, Craig doesn’t truly know what goes on in the inner experience of Sandra. So he can’t say 100%. Anything we state with 100% confidence is called knowledge (facts and information acquired directly through experience).

Likewise, Craig believes the Earth is round. He was told in school that the Earth is round, has watched documentaries about astronomy on TV, has seen pictures from NASA, etc. There seems to be plenty of evidence to support a belief that the Earth is round. And at the same time, he has never personally viewed the Earth directly through the window of a spacecraft. Therefore, he accurately states “The Earth is round” as a confident belief. Not as a statement of knowledge.

Work with this for a bit and observe any realizations that emerge.

Although introduced in this post as an experiment in self-knowing, it could also be regarded as an exercise in a beautiful process of liberation described in some traditions as Unknowing.

Unverified belief is a proxy for knowledge. In the absence of complete knowledge about situations, we rely on belief to ‘fill the gap’ and provide context necessary for action. Although belief is essential to our functioning as humans, in our conditioned state, we often invest absolute faith in beliefs and equate them with knowledge. And this state of affairs results in a chaotic and contaminated spiritual body where illusion and falsehood are indistinguishable from truth and knowledge. And the sense of security and self-image (vanity) reinforced by rigid identification with beliefs demands vigilant defense by pride.

The purpose of this exercise is not to determine the truth or falseness of beliefs. Many beliefs are likely true. Others false. The aim of this exercise is simply to cultivate awareness of the difference between beliefs and knowledge, loosen identification with beliefs, and maybe crack open a door for new discovery.

Likewise, the confidence values we assign to beliefs are not very relevant for this experiment. Whether one is 70% or 99% confident is immaterial. Any statement with a value of confidence less than 100% is recognized as belief and disentangled from knowledge.
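For readers who like to see a rule written out, here is a minimal sketch in Python of the three-column sheet and the 100% rule described above. It is only an illustration, not a required part of the experiment. The names `Entry`, `classify`, and `worksheet` are made up for this sketch, and the 70% figure and the "tested" mark for the Earth example are placeholders, not values taken from Craig's sheet.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    """One row of the sheet (all field names are hypothetical)."""
    statement: str     # column 1: "I" believe (or call knowledge)
    tested: bool       # column 2: has this belief been tested?
    confidence: float  # column 3: degree of confidence, from 0.0 to 1.0


def classify(entry: Entry) -> str:
    """Anything below 100% confidence is treated as belief, not knowledge."""
    return "knowledge" if entry.confidence >= 1.0 else "belief"


worksheet = [
    Entry("Sandra loves me", tested=True, confidence=0.99),
    # Placeholder values: the post gives no number for this belief.
    Entry("The Earth is round", tested=False, confidence=0.70),
]

for entry in worksheet:
    print(f"{entry.statement}: {classify(entry)}")
```

Notice that the exact number never changes the outcome. Whether a row says 70% or 99%, it lands in the belief column, which is the whole point of the rule.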

It’s interesting to see what may happen when one works with this experiment for a while.

Perhaps three of the most liberating words in the English language are “I don’t know.”

1 comment

For me personally, this current crisis is a special time for observing the power of suggestion and its effects on my opinions and those of people around me. Astonishing to see how quickly one descends to a level of having persuaded oneself that one has the correct view of reality, that one’s research has gotten to the crux of things and all others are in error: “How can they be so lazy and ignorant and not do their own research?”, “Jesus! Don’t they see they are being played?” The violence that is generated in one’s mind on the back of these opinions (taken as knowledge due to the power of the suggestion) is frightening: one can see how most any act of violence can be justified: “they have brought this on themselves, we did warn them. If an innocent population has to be broken to serve the greater good, then so be it!”

Thank you for sharing this.